Cloud computing is the delivery of computing resources such as servers, storage, and software as services over the internet, and Amazon Web Services (AWS) is a well-known cloud computing platform run by Amazon, the multinational technology and e-commerce company. Knowledge of the cloud adds weight to the resumes of freshers and experienced professionals alike and can help them land a well-paid job. In this article, I will explain how you can build a face detection application on AWS in just a few hours.

Get Started

To begin, you need an AWS account. Visit https://aws.amazon.com/console/ and sign in with your credentials if you already have an account. Otherwise, choose ‘Create a new AWS account’ and fill in the details. You will then be asked for your card details. These are required only to create the account and are not needed for this project; developing this project won't cost you anything. VISA or MasterCard is preferred. After you submit the details, Rs. 1-2 is deducted from your account for verification and then refunded. Activation can take up to 24 hours. I worked on this project under some amazing trainers from Ethnus Codemithra, and my account was activated in only 15 minutes, so the time depends on the card used and the details filled in. Activation may take a little longer with RuPay cards. If you have any issues with activation, re-check your card details and contact https://aws.amazon.com/contact-us/.

You may get a call from AWS as soon as your account is activated, asking about your purpose for using the platform. This is just to ensure that no one uses AWS in violation of its terms of service, especially for illegal purposes. After activation, services such as EC2, DynamoDB, S3, and Rekognition are available free for 12 months, and you won't be charged as long as you stay within the free tier limits. To know more, visit https://aws.amazon.com/free/.

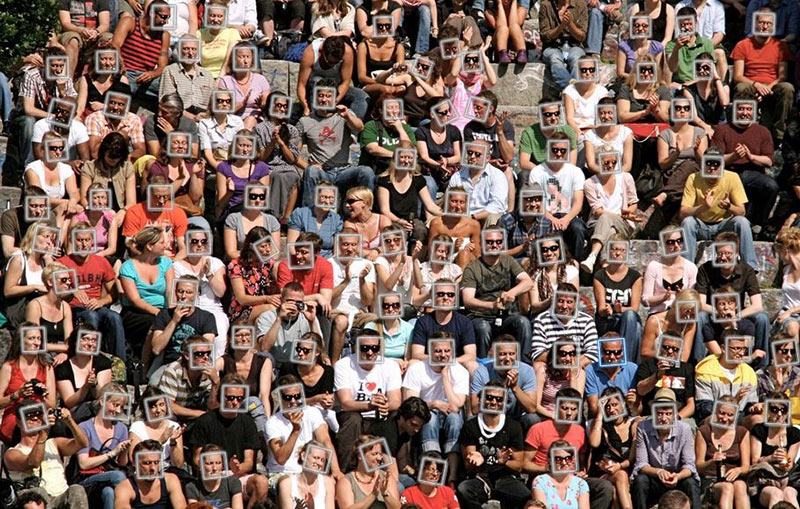

My instructor always suggested that I check which AWS services come under the free tier. This is very much necessary to get the hang of what we are going to do in this project. For a complete beginner, I suggest looking at AWS EC2, S3, and Rekognition. Many other services are available, but these three form the main architecture of our application. EC2 (Elastic Compute Cloud) is a service for launching instances, which are virtual servers that act as your computing environment. Working from an EC2 instance, we will store a test image in S3 (Simple Storage Service). The Rekognition service will then detect the number of faces in this image, and the result will be displayed on the terminal we use to interact with EC2.

This application can even be made to interact with a Telegram bot, and I will point you to a resource that covers this later in the article. AWS Rekognition is the main service of this application, doing the actual face detection, and it is one of my favorite services. I was left dumbstruck when I first explored it. It is an AI-based computer vision service, offered to AWS users as software as a service, that recognizes various aspects of an image. I always wondered why it is spelled “Rekognition”, with a ‘k’ in place of the ‘c’. After some research, I found that Rekognition is a German word with its own meaning: the acknowledgement of the authenticity of a person, thing, or certificate. The name suits a service that delivers accurate results, whether it is recognizing aspects of an image or comparing images, and it is a fantastic AWS service.

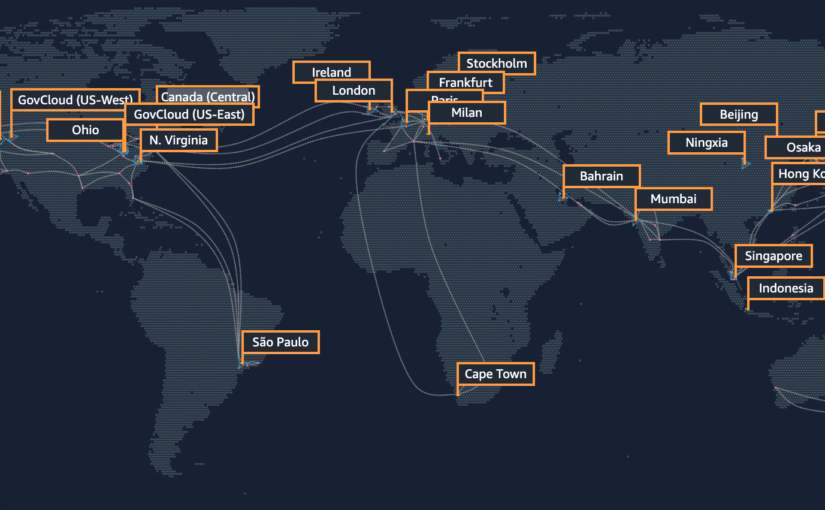
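To make the face count concrete: Rekognition's DetectFaces API returns a response whose `FaceDetails` list holds one entry per detected face, each with a `BoundingBox` and a `Confidence` score. The number of faces the application reports is essentially the length of that list. The sketch below is in Python rather than the project's PHP, purely for illustration. The `count_faces` helper, its optional `min_confidence` filter, and the trimmed sample response are hypothetical, not taken from the original codebase.

```python
from typing import Any, Dict


def count_faces(response: Dict[str, Any], min_confidence: float = 0.0) -> int:
    """Count faces in a Rekognition DetectFaces response.

    `response` is shaped like the dict returned by boto3's
    rekognition.detect_faces(); each item in "FaceDetails" is one face.
    `min_confidence` (0-100) is an optional, illustrative filter.
    """
    faces = response.get("FaceDetails", [])
    return sum(1 for face in faces if face.get("Confidence", 0.0) >= min_confidence)


# Hypothetical, trimmed-down response describing two detected faces.
sample = {
    "FaceDetails": [
        {"BoundingBox": {"Width": 0.1, "Height": 0.2, "Left": 0.3, "Top": 0.1}, "Confidence": 99.9},
        {"BoundingBox": {"Width": 0.1, "Height": 0.2, "Left": 0.6, "Top": 0.1}, "Confidence": 87.5},
    ]
}
print(count_faces(sample))        # prints 2
print(count_faces(sample, 90.0))  # prints 1
```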

Regions

Regions are separate geographic areas in which AWS hosts the infrastructure for its users' applications and resources. Each region contains multiple, isolated availability zones (AZs), which improve availability: if one zone fails, resources in the other zones can take over. For this project, I suggest sticking to one region for all our services: US East (Ohio), whose region code is us-east-2. Its availability zones are named us-east-2a, us-east-2b, and us-east-2c. Once you sign in to your account, you can see a regions drop-down near your account name. Select the region I have mentioned or any other of your choice, but ensure it is the same for all services throughout this project. To get a clear idea of regions, visit the AWS Global Infrastructure page, which has an image of a globe on the right. Click it to explore a 3D animated globe showing all the regions and availability zones; this part has always been my favorite. If you scroll left as soon as you enter the site, you can see the Ohio region and observe that it currently has 3 availability zones.
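The difference between a region code and an availability zone name is easy to mix up, so here is a small Python sketch. It derives the region from a standard AZ name and checks that every resource sits in the same region. The helper names and the regex are illustrative assumptions. The regex covers standard AZ names such as us-east-2a, not Local Zones or AZ IDs.

```python
import re

# Standard AZ names append one letter to the region code, e.g. "us-east-2a".
_AZ_PATTERN = re.compile(r"^([a-z]{2}(?:-[a-z]+)+-\d+)([a-z])$")


def region_of_zone(zone: str) -> str:
    """Return the region code that a standard availability zone name belongs to."""
    match = _AZ_PATTERN.match(zone)
    if match is None:
        raise ValueError(f"not an availability zone name: {zone!r}")
    return match.group(1)


def check_same_region(expected_region: str, **resource_regions: str) -> None:
    """Raise ValueError if any resource (passed as name=region) is elsewhere."""
    mismatched = {name: r for name, r in resource_regions.items() if r != expected_region}
    if mismatched:
        raise ValueError(f"resources outside {expected_region}: {mismatched}")


# Example: an instance in us-east-2a and a bucket in us-east-2 are consistent.
check_same_region("us-east-2", instance=region_of_zone("us-east-2a"), bucket="us-east-2")
```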

Working with EC2

To begin developing our project, launch an EC2 instance as follows:

- Sign in to the AWS Management Console

- In the Services section, go to EC2

- Press Launch instance -> Launch instance

- Choose an Amazon Machine Image (AMI), the operating system for your server. It is suggested to choose the first one (Linux, 64-bit x86), which comes under the free tier

- Instance type: t2.micro, which comes under the free tier

- No changes need to be made in the next step (Configure Instance Details)

- Add Storage: set the size to 8 GB

- Add Tags: this step only names your instance, so no changes are needed. Optionally, press Add Tag, enter Name as the key, and enter a name of your choice as the value. This is useful when you launch multiple instances, but the suggestion is to leave this step unchanged.

- Configure Security Group: select Create a new security group and leave everything at the default. Note that the type is SSH, the protocol is TCP, and the port is 22, and note down the security group name. You can add an optional description.

- Review Instance Launch: check all the details and press Launch

- In the pop-up that appears, select Create a new key pair, give it a name of your choice (for example, aws-sharanya-key), and press Download Key Pair. Every instance needs a public-private key pair so that you can log in to it once it is launched. The downloaded file is in .pem format, which you will need to convert to .ppk later.

Your instance will now launch. In the EC2 console, a new instance will catch your eye. Wait for its status checks to show 2/2 checks passed, using the refresh button on the top right to update the screen. Once the instance state turns green and displays Running, you are done! You have launched your instance successfully, and it's up and running.

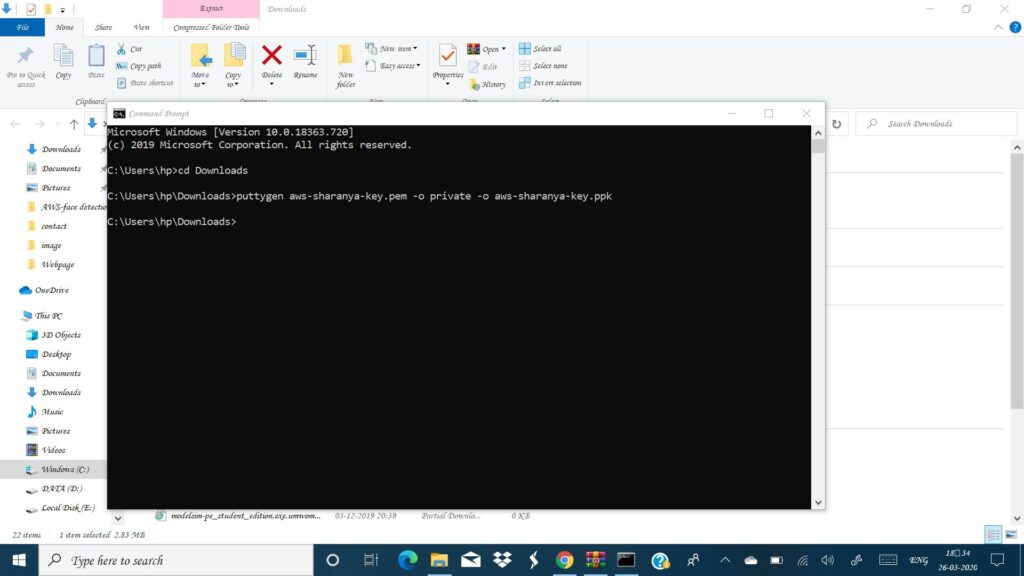
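For readers who prefer scripting, the console steps above map roughly onto a single `run_instances` call in the AWS SDK for Python (boto3), followed by waiting for the two status checks. This is a sketch under stated assumptions, not part of the original project. The AMI ID and security group ID are placeholders you would look up yourself. The `/dev/xvda` root device name assumes an Amazon Linux AMI. The EC2 client is passed in so that the logic can be exercised without an AWS account.

```python
from typing import Any, Dict, Optional


def build_launch_params(ami_id: str, key_name: str, security_group_id: str,
                        volume_gb: int = 8) -> Dict[str, Any]:
    """Keyword arguments for boto3's ec2.run_instances(), mirroring the console steps."""
    return {
        "ImageId": ami_id,                  # the free-tier Linux AMI you chose
        "InstanceType": "t2.micro",         # free-tier instance type
        "KeyName": key_name,                # key pair created at launch
        "SecurityGroupIds": [security_group_id],
        "MinCount": 1,
        "MaxCount": 1,
        "BlockDeviceMappings": [
            # /dev/xvda is the root device on Amazon Linux AMIs; others may differ.
            {"DeviceName": "/dev/xvda", "Ebs": {"VolumeSize": volume_gb}},
        ],
    }


def launch_and_wait(ec2: Any, params: Dict[str, Any]) -> Optional[str]:
    """Launch one instance, wait for both status checks, return its public IPv4."""
    instance_id = ec2.run_instances(**params)["Instances"][0]["InstanceId"]
    # The console's "2/2 checks passed" covers the instance and system checks.
    for waiter_name in ("instance_status_ok", "system_status_ok"):
        ec2.get_waiter(waiter_name).wait(InstanceIds=[instance_id])
    reservations = ec2.describe_instances(InstanceIds=[instance_id])["Reservations"]
    # Absent if the subnet does not assign public IPs (the default VPC does).
    return reservations[0]["Instances"][0].get("PublicIpAddress")


# Usage (requires boto3 and credentials; the IDs below are placeholders):
# import boto3
# ec2 = boto3.client("ec2", region_name="us-east-2")
# params = build_launch_params("ami-PLACEHOLDER", "aws-sharanya-key", "sg-PLACEHOLDER")
# print(launch_and_wait(ec2, params))
```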

As a beginner, I knew that an instance is essentially a virtual computer that I had launched in a particular region, but I wondered how I could access it. I am sure you have the same question. It's very simple, thanks to software such as PuTTY and Windows Subsystem for Linux (WSL). In this tutorial we will use PuTTY; for more information on how to connect to your instance, visit Connect to Linux EC2. Download PuTTY and PuTTYgen from putty.org and puttygen.com respectively. The latter is needed to convert your private key file to a different format. After downloading, add their location to the PATH system variable and run this command in PowerShell or your terminal: puttygen private_key_name.pem -O private -o private_key_name.ppk. This command converts your .pem file to .ppk: -O private selects a PuTTY private key as the output type, and -o names the output file. Remember that the key pair you downloaded while launching your instance is a .pem file, and PuTTY needs a .ppk file to connect to your instance.

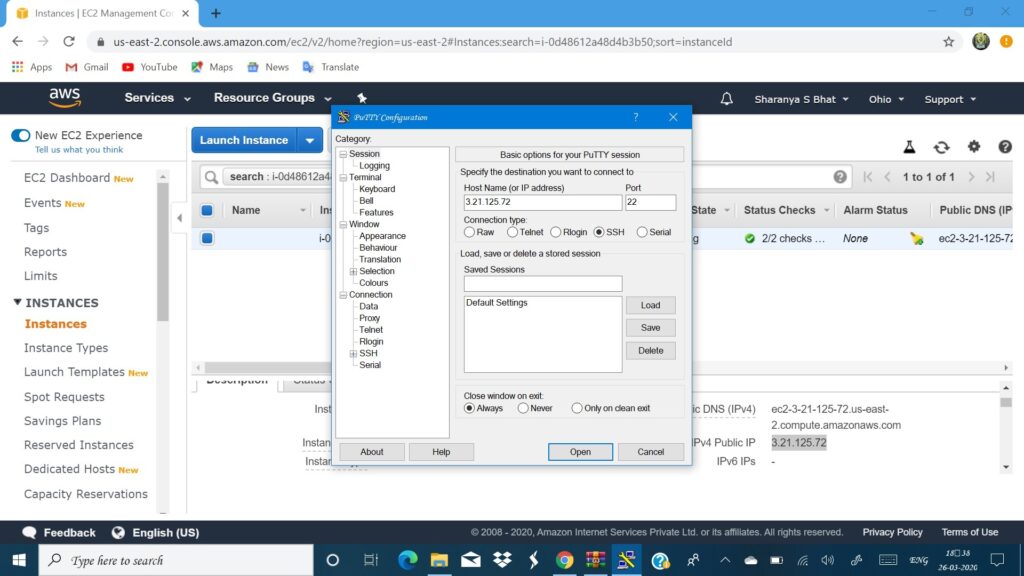

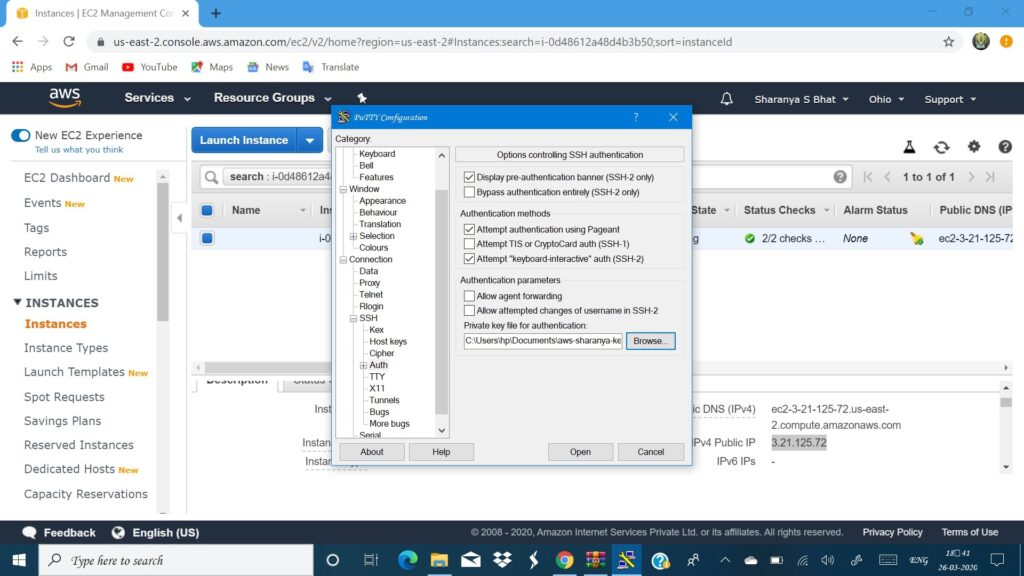

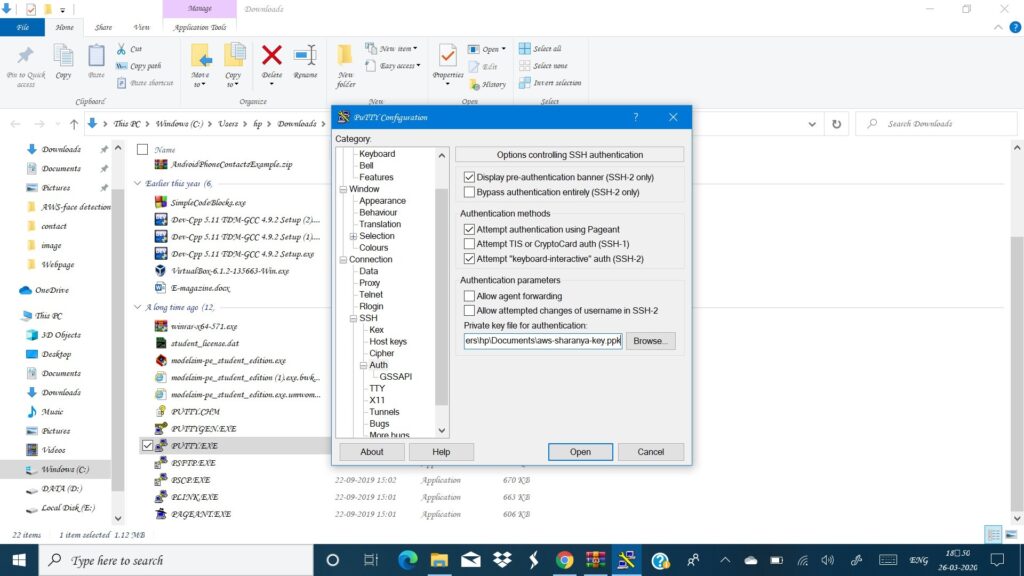

At the bottom of your EC2 console, you will find the instance's Public IPv4 address. Copy it and launch PuTTY. Paste it in the Host Name (or IP address) field and select SSH as the connection type. Then, in the left panel, go to SSH -> Auth, press Browse, give the path to your private key .ppk file, and press Open. The steps are as shown:

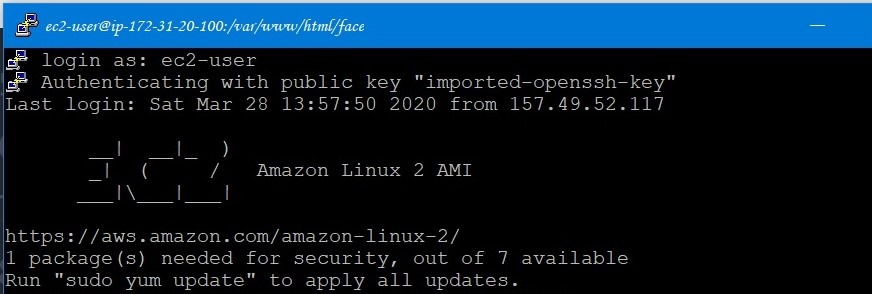

A PuTTY terminal will pop up and ask for your username. Type ec2-user. You should now see something like this:

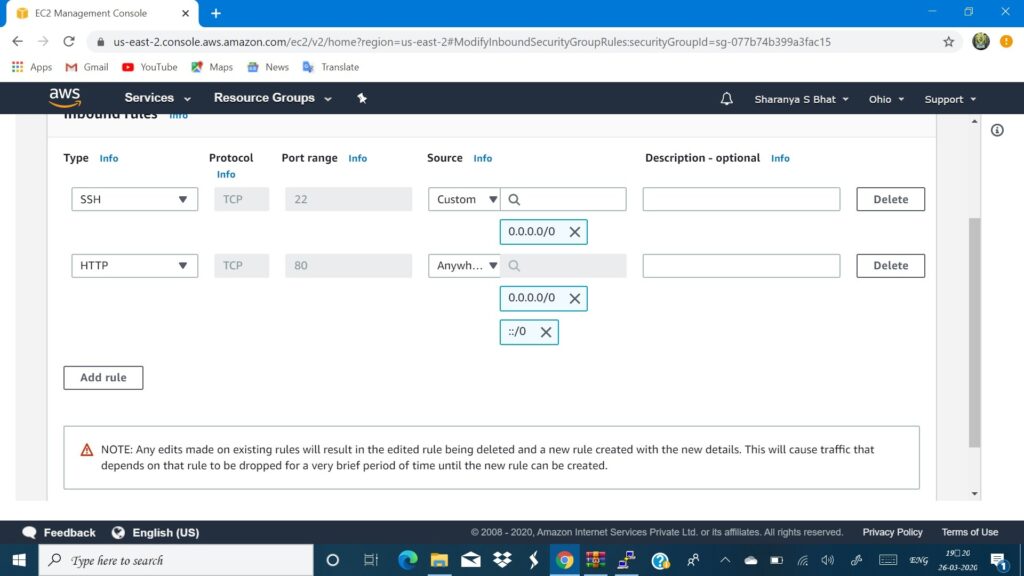
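The key conversion and login steps can also be written out as commands. On Linux, macOS, or WSL, OpenSSH can use the .pem file directly, so no .ppk conversion is needed. Note that ssh refuses a key file that other users can read, so restrict it first, for example with chmod 400. The Python helpers below are hypothetical and only build argument lists you could pass to `subprocess.run`. The `-O private` / `-o` options of puttygen and the `-i` option of ssh are the standard flags. The key name and the documentation-range IP address are placeholders.

```python
from pathlib import Path
from typing import List


def puttygen_convert_cmd(pem_path: str) -> List[str]:
    """puttygen command that converts an OpenSSH .pem key into a PuTTY .ppk key."""
    ppk_path = str(Path(pem_path).with_suffix(".ppk"))
    # -O selects the output type (a PuTTY private key); -o names the output file.
    return ["puttygen", pem_path, "-O", "private", "-o", ppk_path]


def ssh_cmd(pem_path: str, public_ip: str, user: str = "ec2-user") -> List[str]:
    """OpenSSH command that logs in with the .pem key directly (no .ppk needed)."""
    return ["ssh", "-i", pem_path, f"{user}@{public_ip}"]


# Usage (placeholders; run with: import subprocess; subprocess.run(cmd, check=True)):
# puttygen_convert_cmd("aws-sharanya-key.pem")
# ssh_cmd("aws-sharanya-key.pem", "203.0.113.10")
```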

For this project to be connected to the Telegram bot, you need the Apache HTTP server (httpd) installed on your instance, so type the command sudo yum install httpd. Once you see a ‘Complete!’ message on your terminal, type sudo service httpd start. To check the status of your httpd server, type sudo service httpd status; you should now see ‘active (running)’ written in green. Your httpd server is now up and running. It will serve the HTML and PHP files saved on your instance directly to your web browser, but only after you add an inbound rule for HTTP traffic. To do so, go to the left navigation pane -> Security Groups, select the security group for your instance that you noted during launch, and select Edit inbound rules. You will notice that your instance allows only SSH traffic, so you need to allow HTTP traffic as well. Press Add rule, select HTTP from the drop-down, set the source to ‘Anywhere’, and save. This step is not compulsory if you limit your project to a terminal interface, but it is required if you want to connect the entire architecture to a Telegram bot.

To learn more about EC2, visit Amazon EC2.

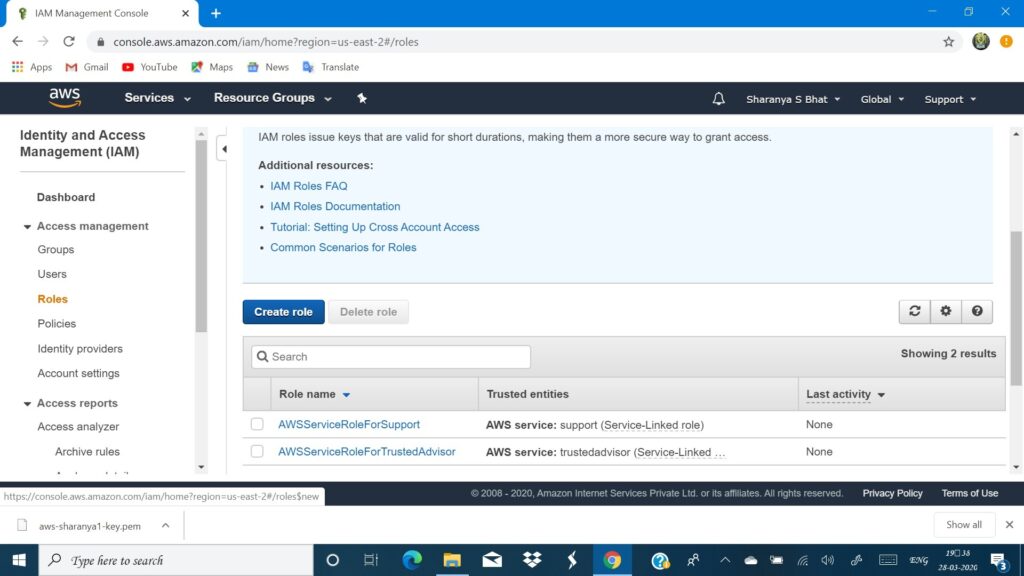
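The inbound rule added in the console corresponds to an `authorize_security_group_ingress` call in boto3. The Python sketch below only builds the keyword arguments for that call. The helper name and the security group ID in the usage comment are placeholders. The `0.0.0.0/0` CIDR is the IPv4 part of the console's ‘Anywhere’ source.

```python
from typing import Any, Dict


def http_ingress_params(group_id: str, cidr: str = "0.0.0.0/0") -> Dict[str, Any]:
    """Keyword arguments for ec2.authorize_security_group_ingress() opening TCP port 80."""
    return {
        "GroupId": group_id,
        "IpPermissions": [
            {
                "IpProtocol": "tcp",
                "FromPort": 80,   # HTTP; HTTPS would use 443
                "ToPort": 80,
                # 0.0.0.0/0 allows any IPv4 address, like the console's "Anywhere".
                "IpRanges": [{"CidrIp": cidr, "Description": "HTTP"}],
            }
        ],
    }


# Usage (requires boto3 and credentials; the group ID is a placeholder):
# import boto3
# ec2 = boto3.client("ec2", region_name="us-east-2")
# ec2.authorize_security_group_ingress(**http_ingress_params("sg-PLACEHOLDER"))
```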

Working with Roles

Identity and Access Management, or IAM, lets you securely manage access to AWS services and resources, including deciding which users and groups can do what. The main reason we use this free service here is to let EC2, S3, and Rekognition interact with each other, and it is a must-know service for a beginner. Policies can be attached to IAM roles, and those policies allow AWS services and resources to interact with each other. Here we will use the ‘AmazonS3FullAccess’ and ‘AmazonRekognitionFullAccess’ policies. Attaching a role with these policies to your already launched instance is essential, or you will end up with nothing but a list of errors. While I was working on this project, many others were working along with me, as the entire project was streamed live on YouTube. Some of my friends, after hours of building the architecture on AWS, ended up with no output and no errors, which only led to exasperation. So remember: an IAM role with the correct policies is like a key that lets one service unlock the doors of others.

Attaching a role is a cakewalk; just follow the steps carefully:

- After signing in, go to IAM from the Services section of your management console.

- In the left navigation pane, go to Roles

- Press create role

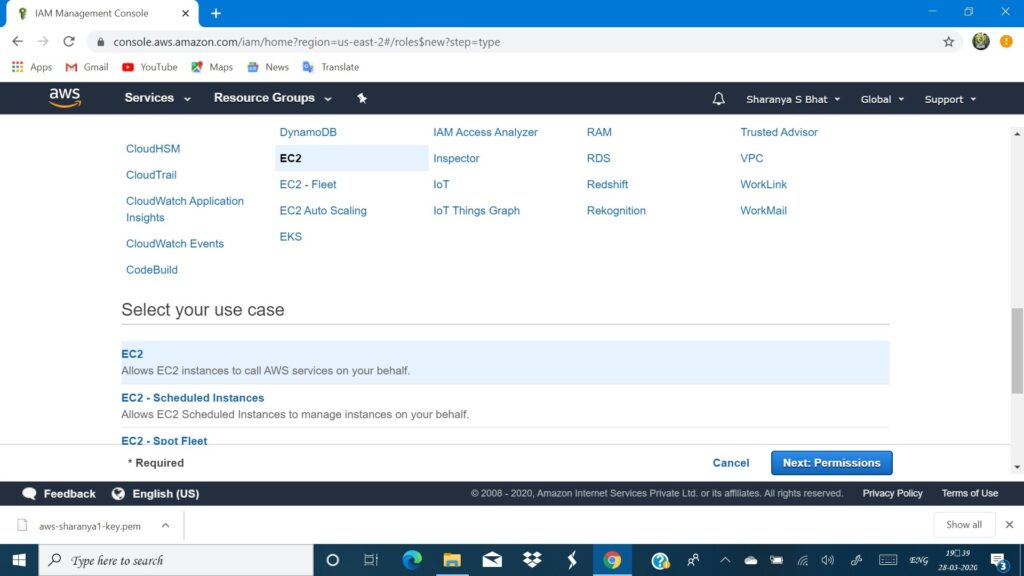

- Select EC2

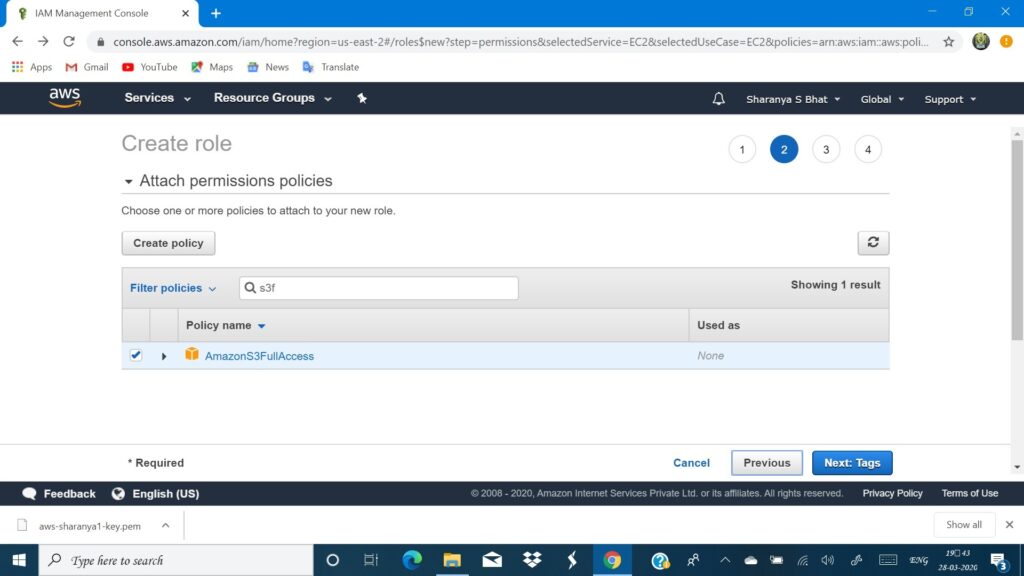

- In the permissions policies step, type ‘s3full’. You should see ‘AmazonS3FullAccess’; select it.

- Then type ‘Rekognition’. You should see several Rekognition policies; select ‘AmazonRekognitionFullAccess’.

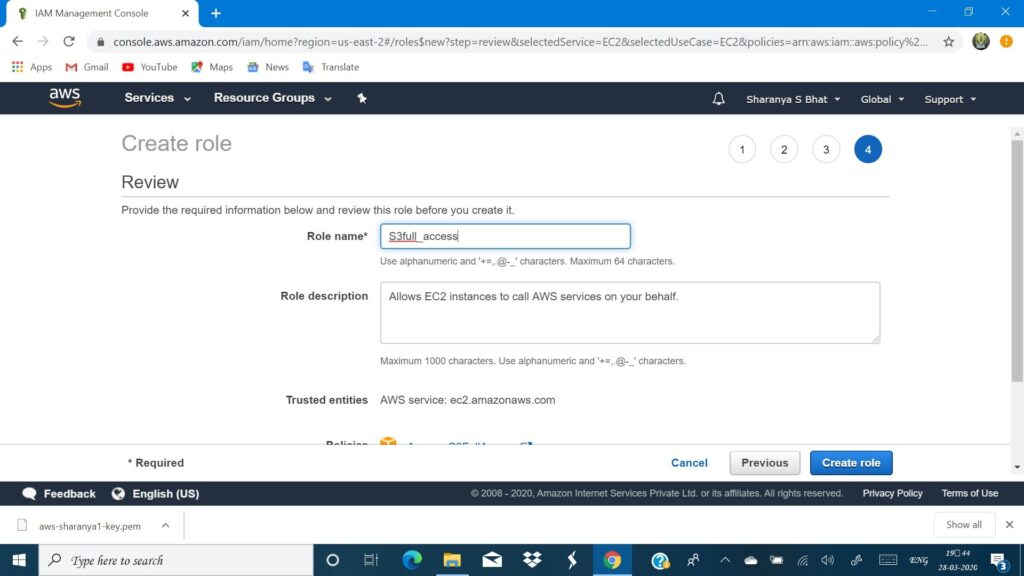

- Give your role a name and an optional description

- Press create role

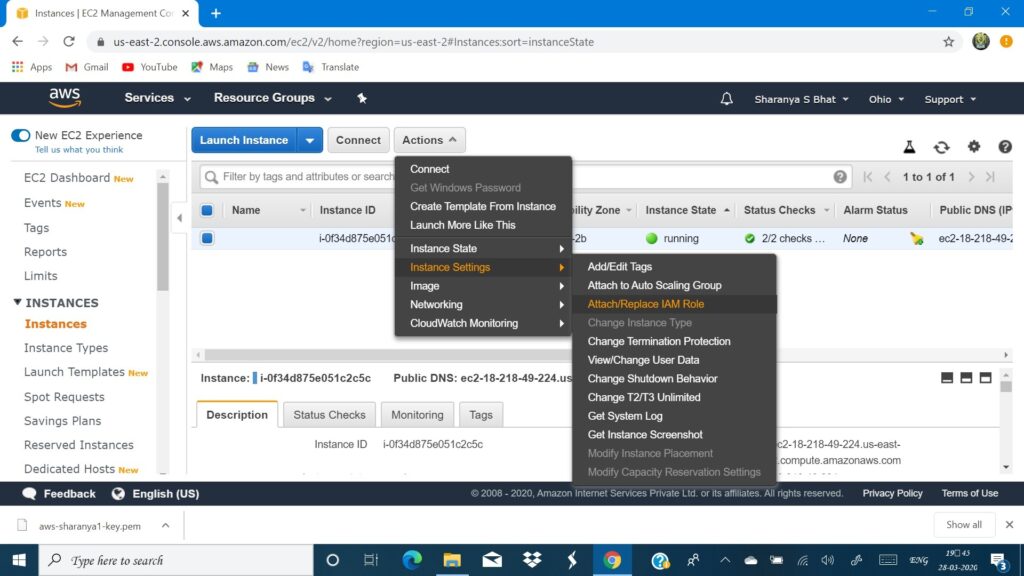

- Go to EC2, select your instance, then select Actions -> Instance settings -> Attach/Replace IAM role. Choose your role name and press Apply.

Once you see the ‘role successfully attached’ message, you have given EC2 a key to access S3 and Rekognition. To know more about IAM, visit AWS IAM.

For more clarity, go through the steps for attaching roles as shown in the screenshots above.
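Behind the console wizard, the role consists of a trust policy that lets EC2 assume it, plus the two AWS managed policies. The Python/boto3 sketch below shows that structure; it is an illustration, not the article's method. One assumption to note: through the API, EC2 attaches a role via an instance profile, which the console creates for you automatically. The role name used in the tests is hypothetical. IAM changes can take a short while to propagate, so the final association call may need a retry in practice.

```python
import json
from typing import Any, List

# ARNs of the AWS managed policies selected in the console steps.
MANAGED_POLICY_ARNS: List[str] = [
    "arn:aws:iam::aws:policy/AmazonS3FullAccess",
    "arn:aws:iam::aws:policy/AmazonRekognitionFullAccess",
]


def ec2_trust_policy() -> str:
    """Trust policy that lets EC2 assume the role (what choosing 'EC2' sets up)."""
    return json.dumps({
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "ec2.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    })


def create_role_for_ec2(iam: Any, ec2: Any, role_name: str, instance_id: str) -> None:
    """Create the role, attach both policies, and associate it with an instance."""
    iam.create_role(RoleName=role_name, AssumeRolePolicyDocument=ec2_trust_policy())
    for arn in MANAGED_POLICY_ARNS:
        iam.attach_role_policy(RoleName=role_name, PolicyArn=arn)
    # The API needs an explicit instance profile to carry the role to EC2.
    iam.create_instance_profile(InstanceProfileName=role_name)
    iam.add_role_to_instance_profile(InstanceProfileName=role_name, RoleName=role_name)
    iam.get_waiter("instance_profile_exists").wait(InstanceProfileName=role_name)
    ec2.associate_iam_instance_profile(
        IamInstanceProfile={"Name": role_name}, InstanceId=instance_id)


# Usage (requires boto3 and admin credentials; names are placeholders):
# import boto3
# create_role_for_ec2(boto3.client("iam"), boto3.client("ec2", region_name="us-east-2"),
#                     "face-app-role", "i-PLACEHOLDER")
```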

Working with S3 from EC2

Simple Storage Service (S3) can be accessed directly from the Services section, and files are uploaded to containers called buckets. In this tutorial, we will upload our file from EC2. Go to S3 from the Services section and press Create bucket. Type a globally unique name for it, select the region, and press Create. The region must be the same as the one you used for the other services; if you are following me, that is US East (Ohio), us-east-2. Once your bucket is created, make it public; to learn how, visit AWS S3 Bucket. The bucket is now a container ready to hold data as objects, which we will upload from EC2. To know more about how to use S3, visit AWS S3.
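Two things commonly trip people up here: bucket names must follow S3's naming rules, and creating a bucket outside us-east-1 through the API requires stating the region explicitly. The Python sketch below checks the main naming rules and builds the arguments for boto3's `s3.create_bucket`. It is not an exhaustive implementation of the rules; for example, it does not check the reserved prefixes and suffixes. The helper names are hypothetical.

```python
import ipaddress
import re
from typing import Any, Dict

# 3-63 chars of lowercase letters, digits, dots, hyphens; letter/digit at both ends.
_NAME = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def is_valid_bucket_name(name: str) -> bool:
    """Check the main S3 general-purpose bucket naming rules (not exhaustive)."""
    if not _NAME.match(name) or ".." in name:
        return False
    try:
        ipaddress.IPv4Address(name)  # names formatted like 192.168.5.4 are not allowed
        return False
    except ValueError:
        return True


def create_bucket_params(name: str, region: str) -> Dict[str, Any]:
    """Keyword arguments for s3.create_bucket() in the given region."""
    params: Dict[str, Any] = {"Bucket": name}
    # us-east-1 is the default; any other region (e.g. us-east-2 for Ohio)
    # must be named in CreateBucketConfiguration or the request is rejected.
    if region != "us-east-1":
        params["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return params


# Usage (requires boto3 and credentials; the bucket name is a placeholder):
# import boto3
# boto3.client("s3", region_name="us-east-2").create_bucket(
#     **create_bucket_params("your-bucket-name", "us-east-2"))
```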

Connect to EC2 from PuTTY and type the command sudo yum install php. The main codebase that makes the entire architecture of our application work is written in PHP, so do not forget this step.

Once the installation is complete, type curl -sS https://getcomposer.org/installer | php. Then type cd /var/www/html, and once you are inside that directory, type sudo mkdir face. This is the folder where we will upload our sample image for detecting faces. Download this file, Face detection app codebase; all the remaining commands are clearly mentioned in it. Remember to change the bucket and image name in the codebase, and ensure that the region in it is the same as your bucket's region.

You may have noticed the command sudo mv b97ea33b5842c7894b804923c6c05580.jpg sample.jpg after the wget command. It renames the downloaded image to sample.jpg, and you can change this to any file name you wish. The codebase uploads the image saved in the face folder to your S3 bucket, from where the Rekognition service accesses it to detect faces. Hence, the bucket and image names must be mentioned correctly; this is a very important step. My friends ended up pulling their hair out over the errors that popped up, only to finally realize that they had not changed the bucket name.

In your PuTTY terminal, type sudo vim index.php. This opens the vim editor, where you can paste the code you just downloaded. To paste, first press ‘i’ on your keyboard; you will see -- INSERT -- appear at the bottom of the editor. Once you have pasted the code, press Esc and type :wq, which means write and quit. Now type sudo php index.php to execute the index.php file. For the sample image, you should get one line saying ‘image upload done your image url…’ and another saying ‘totally there are 9 faces’. These lines come from S3 and Rekognition respectively. If you have not executed the commands above and those in the file correctly, the output may not appear at all, or you may get a strange error.
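The flow behind those two output lines is: upload the image to S3, ask Rekognition to run DetectFaces on the S3 object, and count the returned faces. The sketch below shows that flow in Python with boto3 as an illustrative equivalent. It is not a translation of the PHP codebase, which is not reproduced here. The function name, bucket name, and file paths are placeholders. The clients are passed in, so the logic can be tried without AWS. The printed URL opens in a browser only if the object is publicly readable.

```python
from typing import Any, Tuple


def upload_and_count_faces(s3: Any, rekognition: Any, local_path: str,
                           bucket: str, key: str, region: str) -> Tuple[str, int]:
    """Upload an image to S3, run Rekognition DetectFaces on it, return (url, face count).

    `s3` and `rekognition` are boto3 clients created in the bucket's region.
    """
    s3.upload_file(local_path, bucket, key)
    # Rekognition reads the image straight from S3; the policies on the
    # EC2 instance's IAM role are what authorize both calls.
    response = rekognition.detect_faces(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        Attributes=["DEFAULT"],
    )
    # Virtual-hosted-style object URL.
    url = f"https://{bucket}.s3.{region}.amazonaws.com/{key}"
    return url, len(response["FaceDetails"])


# Usage on the instance (requires boto3 and the attached IAM role; placeholders):
# import boto3
# region = "us-east-2"
# url, faces = upload_and_count_faces(
#     boto3.client("s3", region_name=region),
#     boto3.client("rekognition", region_name=region),
#     "/var/www/html/face/sample.jpg", "your-bucket-name", "sample.jpg", region)
# print(f"image upload done, your image url: {url}")
# print(f"totally there are {faces} faces")
```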

Cheers! We have built our face detection application on a terminal interface.

Terminating Instance and clearing S3

When you are done with your project, and before erasing it all from AWS, take screenshots of the steps and push them to a GitHub repository as a document, so that you can present the repo link to your interviewer. The first question that might come to your mind is: why erase the project? You could keep it, but S3 storage and EC2 running time are free only within set limits; for EC2, that is 750 hours per month. Although the free tier lasts 12 months, it has its own limitations. The person who calls you after your AWS account is activated will explain the services and their usage limits, so note them down, or read about the AWS free tier online.
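A quick calculation shows why one instance fits within the EC2 allowance but two do not. A 31-day month has 31 × 24 = 744 hours, which is just under 750. Two instances running all month would use 1,488 hours. The sketch below assumes the 750-hours-per-month figure described above and ignores partial-hour details.

```python
import calendar

FREE_TIER_HOURS_PER_MONTH = 750  # EC2 free tier allowance as described above


def hours_in_month(year: int, month: int) -> int:
    """Total hours in a calendar month."""
    return calendar.monthrange(year, month)[1] * 24


def within_free_tier(instances_running: int, year: int, month: int) -> bool:
    """True if that many instances running all month stay within the allowance."""
    return instances_running * hours_in_month(year, month) <= FREE_TIER_HOURS_PER_MONTH


print(hours_in_month(2024, 1))       # prints 744
print(within_free_tier(1, 2024, 1))  # prints True
print(within_free_tier(2, 2024, 1))  # prints False (1488 hours)
```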

Go to EC2 -> Actions -> Instance State -> Terminate. After this, go to the EC2 Dashboard, which shows counts of your EC2 resources; it should now show 0 running instances. From there, go to Key Pairs and delete the key pairs you created for connecting to EC2. Then go to IAM and delete the role as well. Most importantly, go to S3 and empty the bucket.

S3 -> _your bucket_ -> select the image -> Actions -> Delete. Then return to the main S3 console, select your bucket, and delete it as well.
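The same cleanup can be scripted with boto3. Here is a sketch, assuming bucket versioning was never enabled (the default); a versioned bucket would also need its object versions deleted. S3 refuses to delete a bucket that still contains objects, so the sketch empties it page by page first. Each page from `list_objects_v2` holds at most 1,000 keys, which is also the `delete_objects` limit. The function names and arguments are placeholders. IAM role deletion is left to the console steps above.

```python
from typing import Any, Dict, List


def empty_and_delete_bucket(s3: Any, bucket: str) -> int:
    """Delete every object in `bucket`, then the bucket itself; return objects deleted."""
    deleted = 0
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        keys: List[Dict[str, str]] = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            # A page holds at most 1,000 keys, matching the delete_objects limit.
            s3.delete_objects(Bucket=bucket, Delete={"Objects": keys})
            deleted += len(keys)
    # Only an empty bucket can be deleted.
    s3.delete_bucket(Bucket=bucket)
    return deleted


def tear_down(ec2: Any, s3: Any, instance_id: str, key_name: str, bucket: str) -> None:
    """Terminate the instance, delete its key pair, then empty and delete the bucket."""
    ec2.terminate_instances(InstanceIds=[instance_id])
    ec2.get_waiter("instance_terminated").wait(InstanceIds=[instance_id])
    ec2.delete_key_pair(KeyName=key_name)
    empty_and_delete_bucket(s3, bucket)


# Usage (requires boto3 and credentials; IDs and names are placeholders):
# import boto3
# tear_down(boto3.client("ec2", region_name="us-east-2"),
#           boto3.client("s3", region_name="us-east-2"),
#           "i-PLACEHOLDER", "aws-sharanya-key", "your-bucket-name")
```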

Video Available

A detailed step-by-step video is available for this project! Visit the Ethnus Codemithra YouTube channel and access the 7-day master class videos to follow the project development stages step by step. If you are interested in connecting this architecture to a Telegram bot and seeing the output there, experienced instructors explain those steps clearly in the video. For beginners, I strongly recommend watching the video before starting the project.

Note: All the services mentioned in this article come under the free tier only if your account is free tier eligible. Make sure you understand the free tier eligibility of each service, and do not use any service that isn't covered by it. Stop using the services once you have completed the project, following the steps above. Otherwise, you will be billed.

What's Next

AWS is an amazing cloud computing platform. Take this project as a kickstart to learning about AWS, then explore more and create your own amazing projects. Don't stop here; this isn't the end of cloud computing!